Edge computing has been gaining ground in industry for several reasons. The most prominent are the ability to process data in real time, since edge computers sit on site and/or on the machine, and the ability to store data on site, which tends to ease many companies’ concerns about data storage in the cloud.

A recent decision by IEEE is likely to boost interest in edge computing even further. The IEEE Standards Association (IEEE-SA) has adopted the OpenFog Consortium’s OpenFog Reference Architecture for fog computing as an official standard. According to the OpenFog Consortium, the new standard, known as IEEE 1934, relies on the reference architecture as “a universal technical framework that enables the data-intensive requirements of the Internet of Things (IoT), 5G and artificial intelligence (AI) applications.”

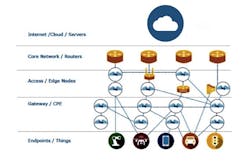

What this means for edge computing becomes clear once you realize that a fog computing system is essentially a network of edge computing devices. The OpenFog Consortium describes fog computing as “a system-level horizontal architecture that distributes resources and services of computing, storage, control and networking anywhere along the cloud-to-things continuum. It supports multiple industry verticals and application domains, enables services and applications to be distributed closer to the data-producing sources, and extends from the things over the network edges through the cloud and across multiple protocol layers.”

To read more about the difference between edge and fog computing, see this Automation World article: “Fog Computing vs. Edge Computing: What’s the Difference?”

The OpenFog Reference Architecture, released in February 2017, is based on eight core technical principles known as pillars, which represent the key attributes a system needs to be defined as OpenFog. These pillars are security, scalability, openness, autonomy, RAS (reliability, availability and serviceability), agility, hierarchy and programmability.

“We now have an industry-backed and supported blueprint that will supercharge the development of new applications and business models made possible through fog computing,” said Helder Antunes, chairman of the OpenFog Consortium and a senior director at Cisco. “As a consortium, we developed the OpenFog Reference Architecture with the intention that it would serve as the framework for a standards development organization. We’re pleased to have worked so closely with the IEEE in this effort as the result is a standardized computing and communication platform.”

The new fog standard, along with other fog computing-related technologies, use cases, applications and tutorials, will be showcased at the Fog World Congress (fogcongress.com), October 1-3, 2018, in San Francisco.

About the Author

David Greenfield, editor in chief
