How Vision Is Becoming More Viable for Automation

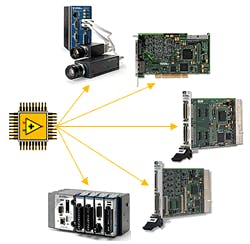

A recent blog post, The Coming Age of Vision Guided Robots, discussed the impact that low-cost imaging sensors are having on the application of vision technologies in automation. To understand, from an engineering viewpoint, how these new vision systems do what they do at such a low price point, look no further than the explosion in the use of FPGAs in embedded systems.

“FPGAs (field programmable gate arrays) are well suited for highly deterministic and parallel image processing algorithms due to the inherent parallel nature of the FPGA itself,” says Carlton Heard, vision product marketing manager at National Instruments. “With a CPU, processes are serialized; therefore, even when using multi-threading technologies such as multiple cores or multiple separate machines, bottlenecks can still occur. FPGAs, however, can run multiple tasks in parallel with very little jitter and latency. Many image-processing algorithms are inherently parallel and, therefore, suitable for FPGA implementation.”

The image processing algorithms Heard refers to involve operations on pixels, lines and regions of interest and, therefore, do not need high-level image information, such as patterns or objects in the image. This means that imaging tasks can be performed on small regions of bits as well as on multiple regions of an image simultaneously. “You can pass the image data to the FPGA in parallel and, because a central processor is not required to process the data, the data can be processed concurrently,” says Heard. “This ability benefits high-performance applications, which require very low latency or jitter, because the FPGA can begin processing the pixels as soon as they begin arriving from the camera.”

Robotic visual servo control, as described in the blog post referenced above, is a perfect example of a low-latency image processing application because image processing and motion control can be contained within a single loop on the FPGA. Examples of image processing algorithms that work well on FPGAs include image transforms, Bayer decoding, centroid measurements, thresholding and edge detection.
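To make the streaming idea concrete, here is a minimal Python sketch of thresholding combined with a centroid measurement, computed one pixel at a time as the data arrives. It is only a software illustration of the dataflow, not FPGA code and not National Instruments' implementation; the names `stream_pixels` and `streaming_centroid` and the sample frame are hypothetical.

```python
def stream_pixels(frame):
    """Yield (row, col, value) in raster order, mimicking pixels arriving from a camera."""
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            yield r, c, value


def streaming_centroid(pixels, threshold):
    """Threshold each pixel as it arrives and keep running sums for the centroid.

    No full-frame buffer is needed: only three accumulators are kept,
    which is why this kind of algorithm can start before the frame is complete.
    """
    count = 0
    sum_r = 0
    sum_c = 0
    for r, c, value in pixels:
        if value > threshold:  # per-pixel threshold decision
            count += 1
            sum_r += r
            sum_c += c
    if count == 0:
        return None  # no pixel exceeded the threshold
    return sum_r / count, sum_c / count


# Hypothetical 4x4 frame with a bright 2x2 blob in the middle.
frame = [
    [0, 0, 0, 0],
    [0, 200, 200, 0],
    [0, 200, 200, 0],
    [0, 0, 0, 0],
]
print(streaming_centroid(stream_pixels(frame), threshold=128))  # (1.5, 1.5)
```

Because each pixel is handled independently and only running totals are stored, the work maps naturally onto hardware that processes pixels as they stream in.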

Heard cautions, however, that some vision algorithms do not divide well and must be serialized or wait until the entire image has been acquired for handling. In cases like this, an FPGA may actually slow down the process: FPGAs get their speed from parallelism, and their raw clock rates are significantly lower than those of today’s processors. This is an important point for machine designers, because the efficacy of their designs will ultimately depend on the vision algorithms being deployed. To help clarify, Heard explains that, while a processor may be able to run an algorithm at 2 GHz, an FPGA that has to serialize the algorithm may run at 100 MHz.
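A rough back-of-the-envelope calculation shows what those clock rates imply. The sketch below uses Heard's 2 GHz and 100 MHz figures and assumes, as a deliberate simplification, that each device completes one operation per clock cycle per processing lane; real throughput depends on the algorithm, memory access and the devices involved. The function names are hypothetical.

```python
CPU_HZ = 2_000_000_000   # 2 GHz processor clock, from Heard's example
FPGA_HZ = 100_000_000    # 100 MHz FPGA clock, from Heard's example


def break_even_parallelism(cpu_hz, fpga_hz):
    """Number of parallel operations per FPGA clock needed to match the CPU.

    Assumes one operation per cycle on each device (a simplification).
    """
    return cpu_hz / fpga_hz


def fpga_speedup(cpu_hz, fpga_hz, parallel_ops):
    """Relative FPGA throughput versus the CPU under the same assumption."""
    return (fpga_hz * parallel_ops) / cpu_hz


print(break_even_parallelism(CPU_HZ, FPGA_HZ))        # 20.0
print(fpga_speedup(CPU_HZ, FPGA_HZ, parallel_ops=1))  # 0.05, fully serialized
print(fpga_speedup(CPU_HZ, FPGA_HZ, parallel_ops=64)) # 3.2, 64 lanes in parallel
```

Under these assumptions, a fully serialized algorithm runs at one-twentieth of the processor's rate on the FPGA, and the FPGA only pulls ahead once the algorithm exposes more than about 20 operations that can run in parallel.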

In some machine designs, an embedded vision application may use a combination of algorithms. Heard says this can be addressed by combining an FPGA and a processor in the same system. “In this type of architecture each chip can handle the algorithms for which it is best suited and even work in combination,” he says. “For example, the FPGA can handle the image acquisition and do pre-processing on the image before the processor performs a pattern match.”
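The division of labor Heard describes can be sketched in software as a two-stage pipeline. In this hypothetical Python example, `fpga_preprocess` stands in for the FPGA stage (a per-pixel threshold) and `cpu_pattern_match` stands in for the processor stage (a simple exhaustive template search). Both functions, the frame and the template are illustrative assumptions, not an actual vendor API or the pattern-matching method used in any particular product.

```python
def fpga_preprocess(frame, threshold):
    """Stand-in for the FPGA stage: binarize every pixel independently."""
    return [[1 if value > threshold else 0 for value in row] for row in frame]


def cpu_pattern_match(binary, template):
    """Stand-in for the processor stage: exact template search over the image.

    Returns the (row, col) of the first match in raster order, or None.
    This step needs the whole preprocessed image, so it is serial by nature.
    """
    t_rows, t_cols = len(template), len(template[0])
    for r in range(len(binary) - t_rows + 1):
        for c in range(len(binary[0]) - t_cols + 1):
            if all(binary[r + i][c:c + t_cols] == template[i] for i in range(t_rows)):
                return r, c
    return None


# Hypothetical grayscale frame and binary template.
frame = [
    [10, 10, 10, 10],
    [10, 250, 240, 10],
    [10, 10, 230, 10],
    [10, 10, 10, 10],
]
template = [
    [1, 1],
    [0, 1],
]

binary = fpga_preprocess(frame, threshold=128)  # parallel-friendly stage
print(cpu_pattern_match(binary, template))      # (1, 1)
```

The preprocessing stage touches each pixel independently, so it suits the FPGA, while the search depends on the complete image and is the kind of work better left to the processor.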

For more information about the role of FPGAs in embedded machine applications, visit the special site we created with National Instruments to cover this topic at www.automationworld.com/nipac.