Smart Cameras vs. Multi-Camera Vision Systems and Other Choices

Every vision system has one or more image sensors that capture pictures for analysis, and all include application software and processors that execute user-defined inspection programs or recipes. Additionally, all vision systems provide some way of communicating results to complementary equipment for control or operator monitoring. But there are many types of vision systems on the market, and choosing among them can be confusing.

Christopher Chalifoux, international applications engineer for vision systems maker Teledyne DALSA, authored a whitepaper to help users make better choices and improve implementation success. He classifies vision solutions into two categories: those with a single embedded sensor (also known as smart cameras) and those with one or more sensors attached (multi-camera vision systems).

“The decision to use one or the other is dependent not only on the number of sensors needed, but also on a number of other factors including performance, ownership cost and the environment where the system needs to operate,” says Chalifoux. “Smart cameras, for example, are generally designed to tolerate harsh operating environments better than multi-camera systems. Similarly, multi-camera systems tend to cost less and deliver higher performance for more complex applications.”

Another way to differentiate the two classes of systems is to think in terms of processing requirements. “For many applications, such as in car manufacturing, it is desirable to have multiple independent points of inspection along the assembly line. Smart cameras are a good choice as they are self-contained and can be easily programmed to perform a specific task and modified if needed without affecting other inspections on the line. In this way processing is "distributed" across a number of cameras,” says Chalifoux.

Similarly, other parts of the production line may be better suited to a "centralized" processing approach. “For example, it is not uncommon for final inspection of some assemblies to require 16 or 32 sensors. In this case, a multi-camera system may be better suited as it is less costly and easier for the operator to interact with,” he says.

The most important consideration when selecting a vision system may be the software. The capabilities of the software must match the application, programming and runtime needs. If you are new to machine vision or if your application requirements are straightforward, you should select software that doesn't require programming, includes core capabilities (e.g., pattern matching, feature finding, barcode/2D, OCR) and can interface with complementary devices using standard factory protocols, says Chalifoux.

For more complex needs, and for users who are comfortable with programming, look for a more advanced software package that offers additional flexibility and control.

“It is important to know that there are significant and important differences between vision systems that make one more suitable than another for any given application. It is equally important to know and appreciate the importance of choosing the optimal sensor, lighting and optics for the job. Failure to do so may result in unexpected false rejects, or even worse, false positives,” says Chalifoux.

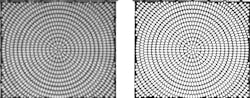

The whitepaper goes into implementation factors, such as image sensor resolution, that should be considered. Image sensors convert light collected from the part into electrical signals. These signals are digitized into an array of values called “pixels” which are processed by the vision system during the inspection.
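To make the idea of a pixel array concrete, here is a minimal Python sketch. It is not taken from the whitepaper: the tiny 4x6 image, the threshold value and the function name `count_bright_pixels` are all hypothetical, chosen only to show how an inspection might process digitized values.

```python
# Hypothetical 4x6 grayscale image: each number is one pixel value
# (0 = black, 255 = white). A real sensor produces far larger arrays.
image = [
    [12,  15, 200, 210,  14, 11],
    [10, 198, 220, 215, 205, 13],
    [11, 202, 225, 218, 199, 12],
    [13,  14, 201, 207,  15, 10],
]

THRESHOLD = 128  # assumed cut-off between dark background and bright part


def count_bright_pixels(img, threshold):
    """Count the pixels whose value is strictly above the threshold."""
    return sum(1 for row in img for value in row if value > threshold)


bright = count_bright_pixels(image, THRESHOLD)
total = len(image) * len(image[0])
print(f"{bright} of {total} pixels are above the threshold")
```

A simple presence check could compare the bright-pixel count against an expected range for a good part. Commercial vision software offers far more robust tools than this.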

“Image sensors used by vision systems are highly specialized, and hence more expensive than, say, a webcam,” says Chalifoux. “First, it is desirable to have square physical pixels. This makes measurement calculations easier and more precise. Second, the cameras can be triggered by the vision system to take a picture based on a part-in-place signal. Third, the cameras have sophisticated exposure and fast electronic shutters that can 'freeze' the motion of most parts as they move down the line.” Image sensors are available in many different resolutions and interfaces to suit a wide range of application needs.
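The point about square pixels can be illustrated with a short Python sketch. The scale factors, point coordinates and the function name `distance_mm` below are assumptions for illustration, not values from the whitepaper, and the sketch ignores lens distortion and perspective.

```python
import math


def distance_mm(p1, p2, mm_per_pixel_x, mm_per_pixel_y):
    """Convert the distance between two (x, y) pixel points to millimeters.

    The scale factors are assumed to come from a prior calibration step.
    """
    dx = (p2[0] - p1[0]) * mm_per_pixel_x
    dy = (p2[1] - p1[1]) * mm_per_pixel_y
    return math.hypot(dx, dy)


# Hypothetical edge points found by the inspection, in pixel coordinates.
a, b = (100, 50), (400, 450)

# Square pixels: one scale factor applies in both directions.
square = distance_mm(a, b, 0.02, 0.02)

# Non-square pixels: each axis needs its own scale factor, so an error
# in either one skews the measurement.
non_square = distance_mm(a, b, 0.02, 0.025)

print(f"square pixels: {square:.2f} mm, non-square pixels: {non_square:.2f} mm")
```

With square pixels, the pixel distance can simply be multiplied by a single calibration constant, which is one reason the measurement math stays simpler.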

About the Author

Renee Bassett

Managing Editor
