Why this article is worth reading:

- Understand how the industrial metaverse, AI and robotics are transforming manufacturing processes via practical applications of digital twins and simulation tools that enable companies to design and validate production environments before physical implementation.

- Get insights on real-world applications, including how a major consumer goods manufacturer is using these technologies to collaborate remotely and improve design processes.

- Learn how these metaverse technologies help create safer working environments by simulating interactions between robots and humans, as well as how emerging AI capabilities will enable more autonomous robots that can perceive their environment and make independent movement decisions.

The industrial metaverse enables seamless cross-organizational collaboration, real-time visualization and simulation, and collaborative design and engineering, and it provides a centralized ground truth for information. It combines digital twins, IoT sensors, and high-fidelity visualization to create a new way of working.

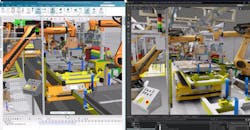

For example, take a battery production scenario in Siemens Process Simulate, where manufacturers can leverage the physics-based digital twin within a virtual shared space. A digital twin built with Siemens Xcelerator software accurately simulates interactions between robots, automated guided vehicles (AGVs) and humans in the production cell. The battery pack assembly line is then modeled in NVIDIA Omniverse, which connects seamlessly with Siemens digital manufacturing tools. The Siemens Tecnomatix Connector to NVIDIA Omniverse transfers Process Simulate studies into Omniverse so that users can view their simulations with realistic, high-fidelity visualization.

To explore how these technologies are reshaping digital manufacturing, specifically in the robotics space, Automation World (AW) connected with Alex Greenberg (AG), director of robotics 4.0 simulation at Siemens Digital Industries Software, for a recent episode of the Automation World Get Your Questions Answered podcast.

Following are highlights from our conversation.

AW: There was a lot of discussion about the possibilities for the industrial metaverse a few years ago, but since then the focus seems to have been more on the development of the underlying technologies that enable it. Can you give us an update on where things stand now?

AG: First, it’s important to understand that the industrial metaverse is not something new that just emerged. It’s more of an evolution of technologies that have existed for at least a couple of decades, such as simulation — the 3D digital environment where engineers can interact with each other or simulate their processes and introduce virtual robots and virtual humans. Here, engineers can alter and validate production processes.

What’s happened in the last few years is that the rise of AI has introduced new opportunities. That's why when we talk about the industrial metaverse, it's not only about simulation; it’s also about the AI available through co-pilots or AI-based assistants that help engineers in their daily tasks, making them more efficient and helping them make better decisions and collaborate. Engineers can now immerse themselves in industrial metaverse environments and work on projects collaboratively with photorealistic surroundings.

AW: How can simulation ensure the effectiveness of robots in a manufacturing production environment?

AG: Let me use Siemens Process Simulate as an example. Using this technology, engineers can develop, simulate and validate automated processes within a real-world manufacturing context.

This real-world context is important because, in the physical world, when you deal with robots, you don't have the bandwidth to install physical equipment just to build and test alternatives. It would be far too expensive to do this physically.

But by shifting left into the digital twin or 3D environment, you can build your manufacturing line with all the robots, equipment, conveyors and human operators and test multiple alternatives to fully optimize your processes.

This reduces the risk of problems after implementation, and it removes the cost of fixing those mistakes or having to debug robot problems after installation.

AW: Can you explain a bit more about how simulation is used to validate programs and ensure safe robot operations?

AG: One of the major concerns related to robots on the shop floor is that you need to make sure they operate to the right safety standards to protect humans and themselves from damage. That’s why safety technologies are being delivered by robot vendors. But these safety measures assume that you’ll restrict the robot’s motions to prevent it from moving into protected spaces.

This can be difficult to achieve on the shop floor for a number of reasons, and that’s where simulation comes in. You can simulate and validate manufacturing environments with restricted areas where the robot is not allowed to move. This means that the safety features in the robot are addressed and are part of the planning process prior to validation of the robot programming within the simulation environment.
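As a rough illustration of the idea (not the Process Simulate API), the check for restricted areas can be sketched in a few lines of Python. Everything here is an assumption made for the example: the `Box` zone type, the `find_violations` helper, the waypoint coordinates and the sampling density are all hypothetical, and a real tool would check the full robot geometry rather than a single point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned restricted zone in metres (hypothetical representation)."""
    lo: tuple
    hi: tuple

    def contains(self, point):
        return all(l <= c <= h for l, c, h in zip(self.lo, point, self.hi))


def interpolate(a, b, steps):
    """Yield evenly spaced points from a to b, both endpoints included."""
    for i in range(steps + 1):
        t = i / steps
        yield tuple(x + t * (y - x) for x, y in zip(a, b))


def find_violations(waypoints, zones, steps=20):
    """Return (segment_index, point) for each sampled point inside a zone."""
    violations = []
    for i, (a, b) in enumerate(zip(waypoints, waypoints[1:])):
        for point in interpolate(a, b, steps):
            if any(zone.contains(point) for zone in zones):
                violations.append((i, point))
    return violations


# Assumed example: one operator zone and a three-waypoint tool path.
operator_zone = Box((1.0, -0.5, 0.0), (2.0, 0.5, 2.0))
path = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.5, 0.0, 1.0)]
violations = find_violations(path, [operator_zone])
print(f"{len(violations)} sampled points fall inside a restricted zone")
```

Because the path is only sampled at discrete points, a zone thinner than the sampling interval could be missed; the sketch is meant to show the planning-time check, not to replace a validated safety function.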

AW: What about humans in a production environment? Can you simulate their potentially random movements around robots to further ensure safety?

AG: Yes, not only can you randomize robot movements, but you can also simulate and assess the tasks of the human operators. And if there are other pieces of equipment or other robots in the vicinity, you can optimize for those as well.

An important practice in these simulations is to plan for minimal interference between humans and machines. This involves randomizing the motions of human operators to see what happens when there is a safety breach and testing those scenarios.

For example, if a person breaches a safety zone, you want to make sure the robot stops or that an access door to machinery is not being blocked in a way that allows a human hand to be pinched inside. Using simulation, all these aspects can again be shifted left to the design environment to reduce the risk of something going wrong on the shop floor.
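The randomized testing described here can be sketched as a small Monte Carlo check. This is a toy model under stated assumptions, not how any Siemens tool works: the operator is a point doing a 2D random walk, the robot sits at the origin, and `ZONE_RADIUS`, `STEP`, `count_unsafe_ticks` and both controller functions are hypothetical names and values chosen for illustration.

```python
import math
import random

ZONE_RADIUS = 1.5  # metres, assumed safety-zone radius around the robot
STEP = 0.3         # metres, assumed maximum operator displacement per tick


def count_unsafe_ticks(controller, seed, trials=100, ticks=200):
    """Count ticks where the operator is inside the zone while the robot moves."""
    rng = random.Random(seed)  # seeded so a failing scenario can be replayed
    unsafe = 0
    for _ in range(trials):
        x, y = 2.5, 0.0  # operator starts outside the zone
        for _ in range(ticks):
            # A random walk stands in for randomized operator motion.
            x += rng.uniform(-STEP, STEP)
            y += rng.uniform(-STEP, STEP)
            distance = math.hypot(x, y)
            robot_moving = controller(distance)
            if distance < ZONE_RADIUS and robot_moving:
                unsafe += 1
    return unsafe


def stops_at_zone(distance):
    """Robot keeps moving only while the operator is outside the zone."""
    return distance >= ZONE_RADIUS


def stops_too_late(distance):
    """Faulty logic: the stop triggers 0.5 m inside the zone boundary."""
    return distance >= ZONE_RADIUS - 0.5


print("correct controller:", count_unsafe_ticks(stops_at_zone, seed=1))
print("faulty controller: ", count_unsafe_ticks(stops_too_late, seed=1))
```

Passing the controller in as a function keeps the scenario generator separate from the logic under test, so the same randomized breaches can be replayed against each candidate stop rule.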

AW: Getting back to the industrial metaverse and virtual reality technologies, what role do they play in these simulations prior to robot deployment and their use following installation?

AG: One of our major manufacturing customers in the consumer goods space was facing challenges because they couldn’t meet face to face with their supplier, who was located in another country. This made it difficult to communicate the design and build of the workstations effectively.

The traditional way of designing these workstations did not involve these advanced technologies, so virtual reality technologies were used to establish a collaborative, immersive environment where engineers could meet remotely wearing headsets and immerse themselves in their designs.

Once the manufacturer designed the stations for the customer, they could both look at them remotely to assess the design and recommend what needed to be fixed. In addition, a virtual operator can be created based on a human wearing sensors to capture the motions of a shop floor worker. This data can be streamed into the simulation environment to test it with the virtual equipment.

This technology stack establishes the capability to collaborate in the industrial metaverse and make educated engineering decisions without traveling and before any physical equipment is installed.

AW: Where does AI figure into robot training and deployment?

AG: There have been major breakthroughs recently that are making robots more autonomous by improving their ability to perceive their environment and make movement decisions using onboard intelligence.

While these capabilities are still developing, we will soon see robots that can autonomously pick products from unstructured boxes (containers) and place them on shelves or into parcels. We’ll see the evolution of humanoid robots equipped with this intelligence — what we call physical AI — that enables them to perceive the environment and translate it into physical actions.

Where AI-enabled robots and simulation meet, simulation can create virtual worlds in which these robots can be trained and validated in various scenarios to perform various tasks. Engineers can also test the robot’s perception and behavior based on feedback from the virtual environment to enhance their training and optimize their capabilities.

Imagine a robot that can perform certain manufacturing tasks autonomously. We want to know what will happen if we introduce this robot into different manufacturing stations. Here, instead of explicit programming, you’re testing and proving the autonomous capabilities of the robot within a specific manufacturing context.

The convergence of the industrial metaverse, artificial intelligence, and robotics marks a transformative era in manufacturing, one where Siemens stands at the forefront of innovation. AI is no longer just an add-on; it’s helping drive intelligent automation in manufacturing simulation. Specifically, Siemens is leading the way in building the foundation of industrial AI, which involves adapting AI technologies such as generative AI, machine learning and machine vision to industrial settings.

For example, take agentic AI, which refers to AI systems that possess a certain level of autonomy and decision-making capability in an industrial context. Agentic AI can help proactively troubleshoot, simulate, and optimize manufacturing processes without needing to be prompted by a manufacturing engineer.

Explore how Siemens is pushing the boundaries of industrial AI development and how these pivotal technologies will contribute major value in the manufacturing planning and simulation space.