Is Our Approach to Artificial Intelligence Wrong?

In the world of industrial automation, artificial intelligence (AI) plays a growing role. At first glance it may not seem widespread, but that’s because the AI technology is largely hidden from view, embedded within a device or software to give it new capabilities. Though we may not yet be using AI as depicted in science fiction movies, it’s no longer a rare feature in automation technologies and we are interacting with it at an increasing rate.

To understand what I mean, think about all the ways AI is being used in automation today. Examples include Advantech’s work with Nvidia to bring AI to its cloud and edge computing products, Aveva’s incorporation of AI into its Asset Performance Management Suite, Epicor adding AI technology to its ERP software, Festo’s combination of AI and pneumatics to aid human/robot interactions, HPE’s addition of AI to many of its enterprise computing products, Omron’s new controller for predictive maintenance applications, PTC adding AI to its CAD software, Siemens adding a neural processing module to its S7-1500 controller, and the enablement of new capabilities in industrial inspection drones. This list is by no means exhaustive, but it does help illustrate the breadth of AI’s application across a variety of technologies used in the discrete manufacturing and processing industries.

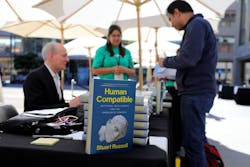

“Turing was unequivocal about this,” said Russell. “We [i.e., humans] lose. So, you have to wonder why we’re doing AI at all. Have we really thought about what might happen? If we make AI [increasingly] better, we lose; so, we have to take concrete steps [to guard against this].”

Russell’s comments about AI were not all doom and gloom, and he said we can still prevent the outcome Turing warned about. He did note, however, that the path we’re currently on will not prevent it.

“Our approach to AI is fundamentally mistaken; we’ve thought about AI the wrong way,” Russell said. “The standard model of AI is one we borrowed from the idea of machine intelligence in the 1940s and 1950s. This idea being that AI is a rational agent created to achieve an objective. But machines don’t have objectives, so we have to plug them in. The problem here is that we don’t know how to set objectives completely to avoid problems.”

He reminded the audience of numerous tales that illustrate how people tend to fail at fully thinking through the ramifications of their plans. Examples include the person granted three wishes by a genie, whose last wish is to undo the previous two because of their unexpected consequences, and King Midas, who wished that everything he touched would turn to gold, only to nearly starve to death when his food turned to gold at his touch.

Getting to this point with AI will not be a simple task, Russell warned. “A lot of work will need to be done to replace the standard AI model. All the techniques and theorems we’ve used to create AI algorithms need to be recreated,” he said.

A good place to start, Russell suggested, is to be aware of all the situations where we don’t need general purpose intelligence. In other words, the types of applications we’re already outfitting with AI that likely don’t need that kind of intelligence. Then we should focus on confining the application to “a strict box of decisions and actions,” he said.
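One way to picture that “strict box” in software is a short, fixed list of permitted actions, with anything else the AI proposes replaced by a safe default. The Python sketch below is purely illustrative and is not drawn from Russell’s talk: the action names, the `confine` function, and the choice of stopping the line as the fallback are all hypothetical assumptions.

```python
from enum import Enum


class Action(Enum):
    """The complete, fixed set of actions the application may take (hypothetical)."""
    CONTINUE = "continue"
    SLOW_DOWN = "slow_down"
    STOP_LINE = "stop_line"


def confine(proposed: str, fallback: Action = Action.STOP_LINE) -> Action:
    """Map a proposed action onto the allowed set.

    Any proposal outside the box is replaced by the conservative fallback.
    """
    try:
        return Action(proposed)
    except ValueError:
        # The proposal is not one of the permitted actions.
        return fallback


# A permitted proposal passes through; an unlisted one falls back to stopping.
print(confine("slow_down"))
print(confine("reroute_power"))
```

The design choice here is that the application never executes a proposal directly; it only ever acts on a member of the predefined list.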

About the Author

David Greenfield, editor in chief
